23 minutes read | published @ May 4, 2022

Coding the Byzantine Generals Problem

Leslie Lamport is one of my all-time favorite computer scientists. That said, something about most of his classical papers, such as Paxos and the Byzantine Generals Problem (BGP), makes it complicated to understand the details.

In addition, there’s a flood of non-technical takes on the BGP due to many people being interested in the non-technical parts of Bitcoin and other cryptocurrencies. It makes it hard to research the details of the problem from a Computer Science perspective.

At this point, I believe we all know, at a very high level, what the BGP is and its main conclusion: An analogy between software processes/nodes and the Byzantine generals trying to reach a consensus about something. Consensus is a hard problem, but it’s especially hard when the nodes, or the generals, can act adversarially. In other words, the other participant nodes of the network can stop responding due to a crash, but, worse, they can respond with wrong information (think bit flipping or other bugs) or with maliciously crafted information, as an adversary of a protocol would.

And then, after a lot of details in the paper, Leslie concludes that a Byzantine fault-tolerant system needs at least $3m + 1$ nodes to support at most $m$ faulty, or traitor/adversarial, nodes. Easy to work with the conclusion: if you have 4 nodes, you can only support 1 bad guy. If you have 2 bad guys in the system, then you’re gonna need 7 nodes, where 5 are honest. And so on. It’s important to note that this result is specifically for synchronous networks. This is a pretty important assumption. It changes a lot once we move to an asynchronous network model, which was solved in later works such as Practical Byzantine Fault-Tolerance by Miguel Castro and Barbara Liskov.

However, this post isn’t only about going over these results. I would like to dive into the details. Not only that, but we’re gonna write code to test some of the ideas on Leslie’s paper. I’m a big fan of building something to truly understand that thing. So let’s get started.

Context and goals are everything

One thing that helped me understand the proposed algorithm was to constantly go back and remember the main goals of the solution proposed by Leslie.

This snippet of the paper captures the high level perfectly:

We imagine that several divisions of the Byzantine army are camped outside an enemy city, each division commanded by its own general. The generals can communicate with one another only by messenger. After observing the enemy, they must decide upon a common plan of action. However, some of the generals may be traitors, trying to prevent the loyal generals from reaching agreement. The generals must have an algorithm to guarantee that

A. All loyal generals decide upon the same plan of action. The loyal generals will all do what the algorithm says they should, but the traitors may do anything they wish. The algorithm must guarantee condition A regardless of what the traitors do. The loyal generals should not only reach agreement, but should agree upon a reasonable plan. We therefore also want to insure that

B. A small number of traitors cannot cause the loyal generals to adopt a bad

plan.

After some discourse around those two points, he lays down a very important piece for us, the interactive consistencies:

Byzantine Generals Problem. A commanding general must send an order to

his $n - 1$ lieutenant generals such that:IC1. All loyal lieutenants obey the same order. IC2. If the commanding general is loyal, then every loyal lieutenant obeys the

order he sends.Conditions IC1 and IC2 are called the interactive consistency conditions. Note

that if the commander is loyal, then IC1 follows from IC2. However, the commander need not be loyal.To solve our original problem, the i-th general sends his value of

v(i)by using a solution to the Byzantine Generals Problem to send the order “usev(i)as my value”, with the other generals acting as the lieutenants.

There’s so much packed in this single passage. Personally, while writing the code to solve the BGP, I found myself very confused about many things, most of which were answered by referring back to the passage above.

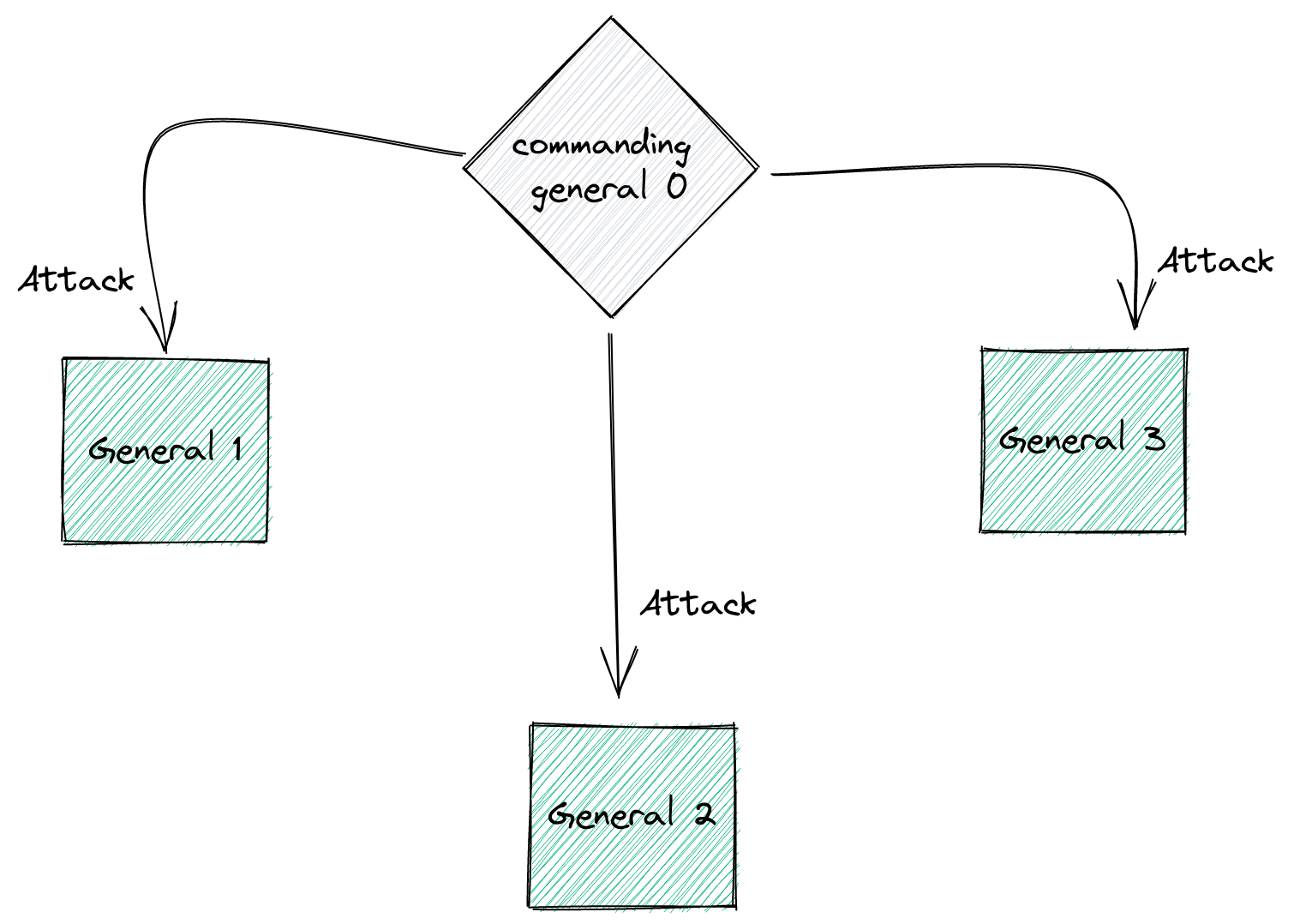

First, Leslie introduces the concept of commanding general. If we have 4 generals, at the beginning of the algorithm we’ll promote one of them to be the commanding general, sending orders to the other 3 generals, now called lieutenants.

Second, as the IC1 says, we care about all loyal (or non-faulty) generals (not the commanding general) following the same order. If we have 4 generals, 1 being a loyal commanding general, 2 loyal generals, and 1 traitor general, it doesn’t matter what the one traitor does; we care that the 2 loyal generals follow the order given by the loyal commander. Eventually, one can realize that’s the goal of most distributed consensus mechanisms.

Third, if the commanding general is loyal, then the order that all loyal generals are following is the same order given by the loyal commanding general. In the case of a traitor commanding general, all hope is lost.

Now, here’s one of the most, if not the most, important parts of this whole thing:

To solve our original problem, the i-th general sends his value of

v(i)by using a solution to the Byzantine Generals Problem to send the order “usev(i)as my value”, with the other generals acting as the lieutenants.

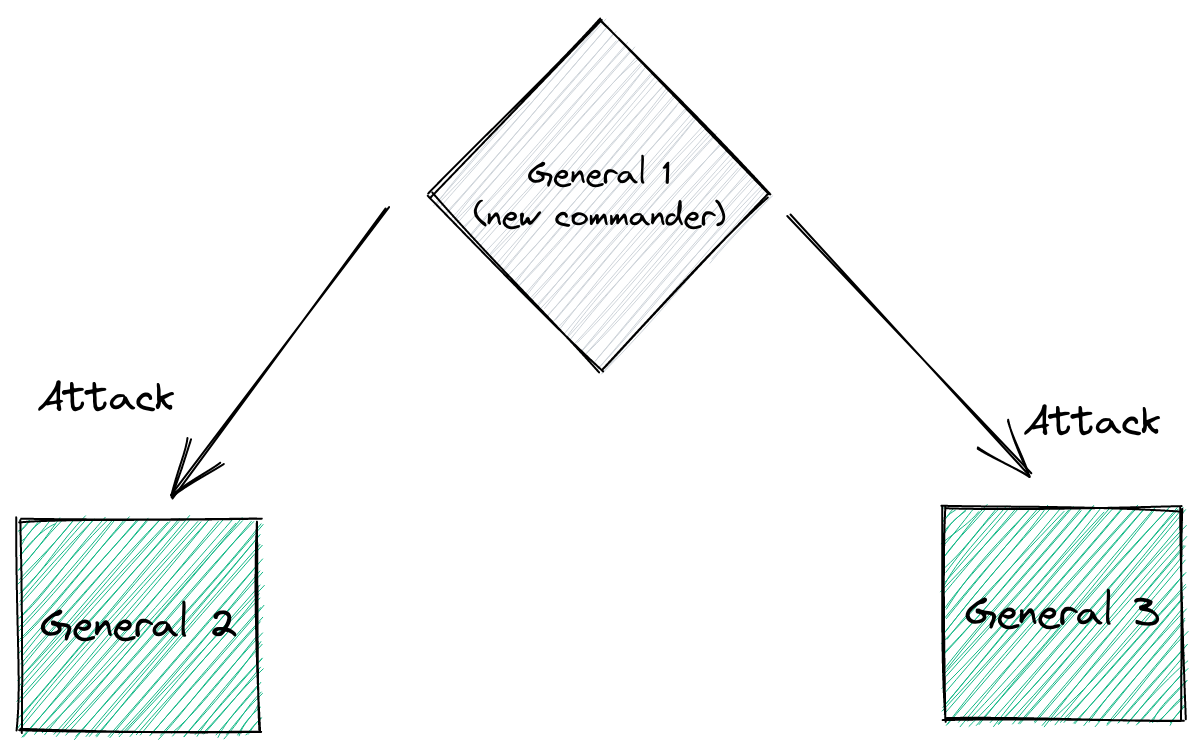

This is a recursive solution. Here’s a concrete example: If we have 4 generals, 1 being the commanding general, once the commander sends their value $i$ to the other 3 lieutenants, each lieutenant will act as a commander in the next iteration of the algorithm, but considering only $n-1$ generals.

On the first step of the algorithm, this is what we have:

Then, once the commanding general sends their order, there will be 3 more steps. Each of the 3 lieutenants will act as commander and send the message they received from the commanding general to the other 2 lieutenants. Meaning that the original commanding general is out of the picture for now:

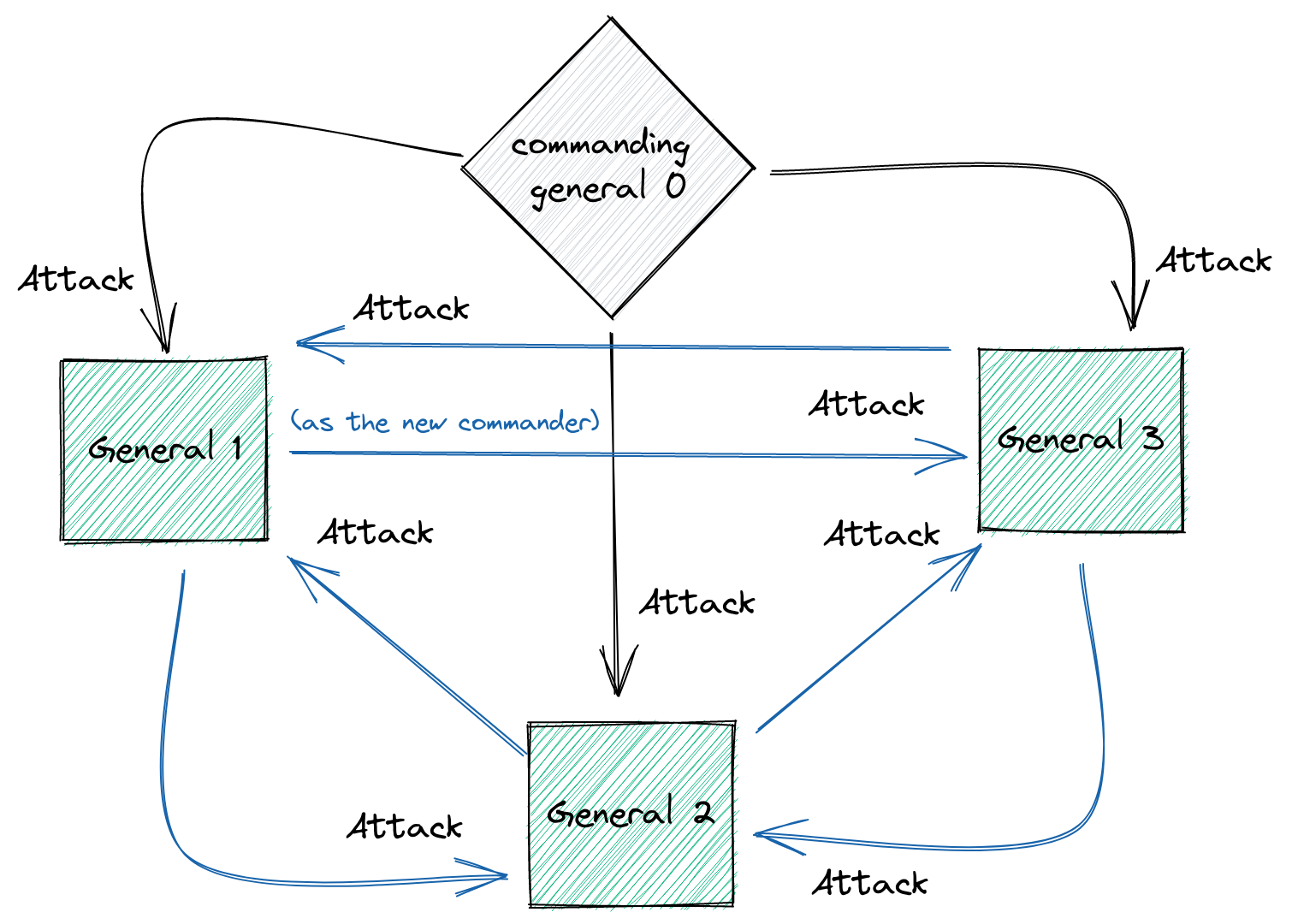

At the end, we have something like this:

And by doing this, something magical emerges: if you have $3m +1$ generals, you can tolerate $m$ traitors. Meaning:

- All loyal generals will follow the same order (IC1)

- If the commanding general is loyal, then every loyal lieutenant obeys the order he sends.

We haven’t seen the proper specs of the algorithm, but that paints the picture of what we want to achieve and the overall idea of how we want to achieve it.

The oral message algorithm

The first solution to this problem, as Leslie named it, is the Oral Message (OM) algorithm. Which will do what we described above.

A few relatively easy to understand assumptions are then made:

- Every message is delivered correctly;

- The receiver of the message knows who sent it;

- The absence of a message can be detected (can be a simple timeout).

The OM algorithm is defined as $OM(m)$, and that’s something that confused me, probably for silly reasons. It wasn’t clear the correlation between $m$ the number of traitors in the system and, as it is in the case of the algorithm, $m$ the level of recursion in the OM algorithm. So, just to clarify, the number of traitors that the algorithm can tolerate is the same as the recursion level in this algorithm. They’re the same $m$.

Leslie then formally describes the OM algorithm very succinctly:

- Algorithm $OM(0)$:

- (1) The commander sends his value to every lieutenant.

- (2) Each lieutenant uses the value he receives from the commander or uses the value RETREAT if he gets no value.

- Algorithm $OM(m)$, $m > 0$:

- (1) The commander sends his value to every lieutenant.

- (2) For each $i$, let $v(i)$ be the value Lieutenant $i$ receives from the commander. Lieutenant i acts as the commander in Algorithm $OM(m - 1)$ to send the value $v(i)$ to each of the $n - 2$ other lieutenants.

- (3) For each $i$, and each $j \neq i$, let $v(j)$ be the value Lieutenant $i$ received from Lieutenant $j$ in step (2) (using Algorithm $OM(m - 1)$). Lieutenant $i$ uses the value $majority(v_1,…v_{n-1})$.

It’s a short algorithm, but there’s so much packed into it. I lost count of how many times I had to re-read this thing while implementing the code.

The base case ($OM(0)$) is actually straightforward. The commander at a given round sends their value to every lieutenant general, and each lieutenant uses that value (e.g., either retreat or attack). But here’s a nice detail: by using the value, it doesn’t necessarily mean that the lieutenant will execute based on that value, not until the end of the algorithm. It will simply hold on to that value either as their decision (came directly from a commander) or as a set of all values received from other lieutenants, which will then be used to make a decision based on the majority in the that lieutenants' set of values.

Things become slightly harder to visualize and reason once we think about the actual recursive rounds ($OM(m)$).

First, the commander of that round will send their value to every lieutenant. “Their value” here deserves some attention. What value? Where did they get this value? If they’re the commanding general (the first commander), it’ll be the value that we want all lieutenants/nodes to agree upon. But if a lieutenant general got “promoted” to a commander in this round, it’ll be the value they received from the previous commander.

Then, in the actual recursive step, after the current commander sends their value to every lieutenant, each lieutenant, which doesn’t include the current commander will be “promoted” to a commander and execute the same algorithm, but now decrementing the $m$ (the recursion level), running $OM(m-1)$. By “to send the value $v(i)$ to each of the $n - 2$ other lieutenants,” Leslie means that the new commander sends the message to the lieutenants and doesn’t include itself and the previous commander.

A tricky bit here that may sound obvious but, once overlooked, can trigger some confusion is that this recursive step happens for each lieutenant in the round. This means the step (3) of the first round won’t be executed until all current lieutenants have acted as commanders and passed the $v(i)$, which might include more and more recursive steps!

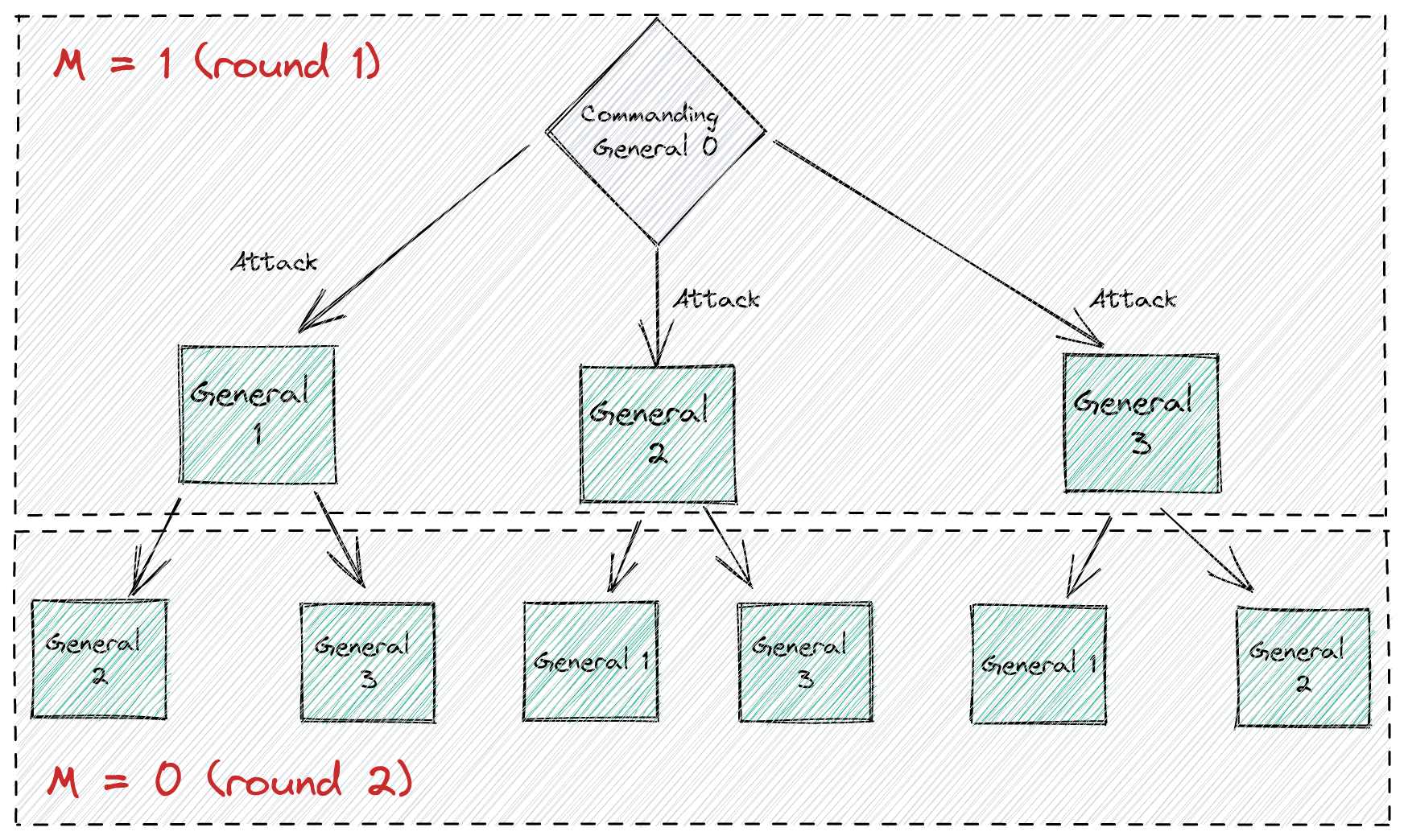

It might help to visualize this workflow as a tree, which actually is how some solutions are implemented:

And then, in step 3, after each lieutenant went into the recursive steps, a given lieutenant $i$ will use, as its decision, the majority value in the set of all the values they have received.

In other words, a commander in a certain round “spreads the word” and then waits to hear back from each lieutenant, then uses, as their own decision, the majority of these values they received from each lieutenant. This is a clever technique to overcome false information, i.e., “I could’ve heard something fake, so I’ll wait to hear back from the others before making my decision.”

Coding the Oral Message algorithm

Note: you can skip ahead and check the final code here

So what does the code look like? Let’s start with the smallest abstraction unit, a simple message that will hold a boolean value:

#[derive(Debug, Clone, Default, Copy)]

struct Message {

attack: bool,

}

A general lieutenant is also pretty simple to represent:

#[derive(Debug, Clone, Default)]

struct General {

id: usize,

messages: Vec<Message>,

is_traitor: bool,

decision: bool,

total_messages_received: usize,

}

Each general holds a list of messages they will receive and eventually will hold a final decision.

We’re going to use RNG to make m Generals with is_traitor set to true.

Also finally, total_messages_received is a field that could help with debugging and show some cool properties later on. Not really necessary for the algorithm.

Receiving an order is also very straightforward:

// Note: this is inside a `impl General {}` block

fn receive_order(&mut self, msg: Message, from: usize) {

if self.messages.is_empty() {

self.decision = msg.attack;

}

self.messages.push(msg);

self.total_messages_received += 1;

}

A bit of detail is that if the list of messages is empty, we’re gonna set the first message’s decision as this General’s decision.

Then, making the decision based on the majority is also very simple:

fn decide(&mut self) {

let msgs = self

.messages

.iter()

.map(|m| m.attack)

.collect::<Vec<bool>>();

let attack = msgs.iter().filter(|&attack| *attack).count();

let retreat = msgs.iter().filter(|&attack| !*attack).count();

if attack > retreat {

self.decision = true;

return;

}

self.decision = false;

}

Count number of trues and falses and decide based on the majority.

That’s pretty much it for the General. Oh, actually, there’s one more thing just to help us simulate faulty Generals; we need something to scramble orders if a given General instance is a traitor! If it’s not a traitor, then just return the original message.

fn next_order(&self, idx: usize) -> Message {

if self.is_traitor && idx % 2 == 0 {

if self.decision {

return Message { attack: false };

} else {

return Message { attack: true };

}

}

Message {

attack: self.decision,

}

}

Also, to make things more chaotic, we not only invert the order but only invert the order if a certain index is even. Pure evil. Now onto the hard bits!

The Oral Message algorithm will be built around the following struct:

struct OMAlgorithm {

// Both these fields will be used later on

is_first_commander_loyal: bool,

original_order: Message,

}

Now, Rust is going to make our lives a bit harder. Generals will hold a list of Messages, and this will be constantly updated as a General receives orders, and since this will be a recursive algorithm and we’ll be passing lists of references to Generals around to achieve memory safety, Rust must hurt us deeply. That’s okay, we’ll cope.

Since Generals will be passed around and mutated, we’ll have to use the Rc<RefCell<T>> pattern: Rc is a pointer with shared ownership while RefCell provides interior mutability. This means a given Rc<RefCell<General>> is shared, and each shared owner gets to mutate the contents (update their list of orders). The effect of mutating the contents will be seen by all of the shared owners of the outer Rc because the inner data is shared.

Sounds simple once it’s all laid out, but it took me a while to get to this solution, especially when you could do it much more easily in order languages, such as Go. But data races, though… Ugh, painful.

Also, it would be relatively easier if we were implementing an actual protocol. Each general would be its own process, and they would communicate over RPC or HTTP endpoints; we wouldn’t need to keep managing all these references.

So this is how the implementation will look like at a high level

impl OMAlgorithm {

fn om_algorithm(

&self,

generals: &[Rc<RefCell<General>>],

commander: Rc<RefCell<General>>,

m: usize,

) {

if m == 0 {

// OM(0):

} else {

// OM(m):

}

}

}

Where in the OM(m), m > 0 block we’ll be calling om_algorithm() recursively.

Let’s tackle the base case first:

impl OMAlgorithm {

fn om_algorithm(

&self,

generals: &[Rc<RefCell<General>>],

commander: Rc<RefCell<General>>,

m: usize,

) {

if m == 0 {

// OM(0):

// The commander sends his value to every lieutenant.

for (idx, general_rc) in generals.iter().enumerate() {

let msg_to_relay = commander.as_ref().borrow().next_order(idx);

let mut general = general_rc.borrow_mut();

general.receive_order(msg_to_relay, commander.as_ref().borrow().id);

}

} else {

// OM(1)

}

}

}

Note that we do:

let msg_to_relay = commander.as_ref().borrow().next_order(idx);

To decide whether we’re relaying the original message or a faulty one, in case the commander of this round is faulty.

Then, we mutably borrow a General from the list of Generals and call its receive_order. And that’s it; that’s the whole base case, as stated by Leslie:

OM(0): The commander sends his value to every lieutenant

Then, the $OM(m), m > 0$ case has three parts:

- First, the commander of the round sends a message to every lieutenant

- In our code, this means the commander is the

commander: Rc<RefCell<General>>in the function argument of this specific invocation ofom_algorithm(), and the lieutenants are the list we’ve received in this function call, i.e. thegenerals: &[Rc<RefCell<General>>]

- In our code, this means the commander is the

- Second, for each

Generallieutenant ingenerals: &[Rc<RefCell<General>>], we’ll be callingom_algorithm, again, but now passing in list ofGenerals without the current commander, and the new commander being thisGeneral(the current one in the “for each”)- This has interesting behaviors, as it’s the recursive step. It might take some time to click.

- Lastly, and putting it in the simplest way: for each general in this scope’s

generals, we’ll “ask” them to decide the final order based on the majority each has in their local list of orders.

It’s much easier to see in code, actually.

impl OMAlgorithm {

fn om_algorithm(

&self,

generals: &[Rc<RefCell<General>>],

commander: Rc<RefCell<General>>,

m: usize,

) {

if m == 0 {

// OM(0):

// The commander sends his value to every lieutenant.

for (idx, general_rc) in generals.iter().enumerate() {

let msg_to_relay = commander.as_ref().borrow().next_order(idx);

let mut general = general_rc.borrow_mut();

general.receive_order(msg_to_relay, commander.as_ref().borrow().id);

}

} else {

// OM(1) (1):

// The commander sends his value to every lieutenant.

for (idx, general_rc) in generals.iter().enumerate() {

let msg_to_relay = commander.as_ref().borrow().next_order(idx);

let mut general = general_rc.borrow_mut();

general.receive_order(msg_to_relay, commander.as_ref().borrow().id);

}

// OM(1) (2):

// For each i, let v(i) be the value Lieutenant i receives

// from the commander, or else be RETREAT if he

// receives no value.

// Lieutenant i acts as the commander in Algorithm OM(m-1)

// to send the value v(i) to each of the n-2 other lieutenants.

for general_rc in generals {

let next_commander = general_rc.as_ref().borrow();

let mut new_generals: Vec<Rc<RefCell<General>>> = vec![];

for general in generals {

if general.as_ref().borrow().id != next_commander.id {

new_generals.push(Rc::clone(general));

}

}

drop(next_commander);

// Sending message v(i) received from the commander

self.om_algorithm(&new_generals, Rc::clone(general_rc), m - 1);

}

// (3) For each i, and each j != i, let v(j) be the value Lieutenant i

// received from Lieutenant j in step (2) using Algorithm OM(m - 1),

// or else RETREAT if he received no such value.

// Lieutenant i uses the value majority (vl, ..., vn-1 ).

// Note: honestly, this was the most subtle part of the whole algorithm...

// Each general is updating their beliefs based on what they heard so far.

for general in generals {

general.borrow_mut().decide();

}

}

}

}

And that’s it! It’s short, but it has a lot of details that will be more apparent the more you look at and reason about the code.

But, now, how do we decide whether the algorithm was successful? That’s a step that can be tricky to debug if you get it wrong. To decide that, we have to refer back to the Interactive Inconsistencies laid out by Leslie at the beginning of the paper:

IC1: All loyal lieutenants obey the same order. IC2: If the commanding general is loyal, then every loyal

fn was_successful(&self, generals: &[Rc<RefCell<General>>]) -> bool {

// Here's how Lamport defines success:

// IC1: All loyal lieutenants obey the same order.

// IC2: If the commanding general is loyal, then every loyal

// lieutenant obeys the order he sends.

// Decision of each loyal general

let mut loyal_decisions = vec![];

for general in generals {

let general = general.as_ref().borrow();

if !general.is_traitor {

loyal_decisions.push(general.decision)

}

}

// Was there consensus amongst all loyal generals?

let loyal_consensus = loyal_decisions.windows(2).all(|w| w[0] == w[1]);

// If not, we've broken IC1

if !loyal_consensus {

return false;

}

// If we had consensus among loyal generals and the first commander

// is loyal, does the consensus match the original order?

// If it doesn't, we've broken IC2.

if self.is_first_commander_loyal {

// Then the loyal consensus should match original order

if self.original_order.attack != loyal_decisions[0] {

return false;

}

}

// IC1 and IC2 hold

true

}

In short, was_successful is just following Leslie’s 2 Interactive Consistencies.

Now, all we have to do is set up a nice environment for some experiments, which include:

- Creating many generals;

- Turning

mgenerals into traitors; - Selecting one of these generals as the initial commanding general;

- Running the algorithm with this setup;

- Checking if it

was_suceessful().

Also, it would be nice to run this experiment many times, letting some RNG pick different combinations of traitors and commanding generals. This is simple enough, though, so I’ll just lay out the code:

fn main() {

// Configuration params

let num_of_generals = 4;

let num_of_traitors = 1;

let m = num_of_traitors;

let num_of_experiments = 10;

for _i in 0..num_of_experiments {

let mut generals: Vec<Rc<RefCell<General>>> = vec![];

// Create all generals, set `is_traitor` as false initially

for i in 0..num_of_generals {

let g = Rc::new(RefCell::new(General::new(i + 1, false)));

generals.push(g);

}

// Pick `num_of_traitors` random generals and set them as traitors

// Note that this could also be the first commanding general

generals

.choose_multiple(&mut rand::thread_rng(), num_of_traitors)

.for_each(|traitor| {

println!("Traitor: General #{}\n", traitor.as_ref().borrow().id);

traitor.as_ref().borrow_mut().is_traitor = true;

});

let order = Message { attack: true };

let mut om_algorithm = OMAlgorithm {

is_first_commander_loyal: true,

original_order: order,

};

// Randomly pick first commanding general

let first_commander = rand::thread_rng().gen_range(0..num_of_generals);

let first_commander_rc = Rc::clone(&generals[first_commander]);

first_commander_rc.borrow_mut().decision = order.attack;

// Remove first commanding general out of the initial list of lieutenant generals

generals.remove(first_commander);

if first_commander_rc.as_ref().borrow().is_traitor {

om_algorithm.is_first_commander_loyal = false

}

// Start the algorithm

om_algorithm.om_algorithm(&generals, first_commander_rc, m);

// Calculate total messages sent for analytical purposes

om_algorithm.get_total_messages(&generals);

// Check whether it was successful

let successful = om_algorithm.was_successful(&generals);

println!("successful: {:?}\n", successful);

if !successful {

println!("Found a case where consensus isn't achieved\n");

break;

}

}

}

Now we can play with different parameters and see how it affects the results. For instance, with 4 generals and 1 traitor ($m = 1$):

Traitor: General #4

I'm commander #3. Sending message: true. m: 1

General #1's first order received: true

General #1 receiving order (true) from commander #3

General #2's first order received: true

General #2 receiving order (true) from commander #3

General #4's first order received: true

General #4 receiving order (true) from commander #3

### general #1 acting as commander ###

I'm commander #1. Sending message: true. m: 0

General #2 receiving order (true) from commander #1

General #4 receiving order (true) from commander #1

General #1 decision after round OM(0): true

### general #2 acting as commander ###

I'm commander #2. Sending message: true. m: 0

General #1 receiving order (true) from commander #2

General #4 receiving order (true) from commander #2

General #2 decision after round OM(0): true

### general #4 acting as commander ###

I'm commander #4. Sending message: true. m: 0

General #1 receiving order (false) from commander #4

General #2 receiving order (true) from commander #4

General #4 decision after round OM(0): true

deciding for general #1

Deciding majority for general #1: [true, true, false]

deciding for general #2

Deciding majority for general #2: [true, true, true]

deciding for general #4

Deciding majority for general #4: [true, true, true]

General #3 decision after round OM(1): true

total_messages: 9

successful: true

Note that this includes many more log messages than the code shown above. I thought it was really cool seeing the log messages as it helped a lot to visualize how the algorithm is behaving, just like a tree! You can find this version on the GitHub repo.

Let’s try 4 generals and 2 traitors ($m = 2$). Leslie states that it would be impossible to succeed given these assumptions (sync network model, permissioned, no cryptography), as we wouldn’t follow the $3m + 1$ bound. In other words, we can only succeed if we have $f \geq 3m + 1$, where $f$ is the number of generals and $m$ is the number of traitors. If we use $m = 2$, we’ll have

$$ f \geq 3*2 + 1 $$

$$

f \geq 6 + 1 \

$$

$$

f \geq 7 \

$$

We would need at least 7 generals in order to survive 2 traitors.

Let’s see how the algorithm behaves with 4 generals and 2 traitors:

Traitor: General #3

Traitor: General #4

I'm commander #1. Sending message: true. m: 2

General #2's first order received: true

General #2 receiving order (true) from commander #1

General #3's first order received: true

General #3 receiving order (true) from commander #1

General #4's first order received: true

General #4 receiving order (true) from commander #1

### general #2 acting as commander ###

I'm commander #2. Sending message: true. m: 1

General #3 receiving order (true) from commander #2

General #4 receiving order (true) from commander #2

### general #3 acting as commander ###

I'm commander #3. Sending message: true. m: 0

General #4 receiving order (false) from commander #3

General #3 decision after round OM(0): true

### general #4 acting as commander ###

I'm commander #4. Sending message: true. m: 0

General #3 receiving order (false) from commander #4

General #4 decision after round OM(0): true

deciding for general #3

Deciding majority for general #3: [true, true, false]

deciding for general #4

Deciding majority for general #4: [true, true, false]

General #2 decision after round OM(1): true

### general #3 acting as commander ###

I'm commander #3. Sending message: true. m: 1

General #2 receiving order (false) from commander #3

General #4 receiving order (true) from commander #3

### general #2 acting as commander ###

I'm commander #2. Sending message: true. m: 0

General #4 receiving order (true) from commander #2

General #2 decision after round OM(0): true

### general #4 acting as commander ###

I'm commander #4. Sending message: true. m: 0

General #2 receiving order (false) from commander #4

General #4 decision after round OM(0): true

deciding for general #2

Deciding majority for general #2: [true, false, false]

deciding for general #4

Deciding majority for general #4: [true, true, false, true, true]

General #3 decision after round OM(1): true

### general #4 acting as commander ###

I'm commander #4. Sending message: true. m: 1

General #2 receiving order (false) from commander #4

General #3 receiving order (true) from commander #4

### general #2 acting as commander ###

I'm commander #2. Sending message: false. m: 0

General #3 receiving order (false) from commander #2

General #2 decision after round OM(0): false

### general #3 acting as commander ###

I'm commander #3. Sending message: true. m: 0

General #2 receiving order (false) from commander #3

General #3 decision after round OM(0): true

deciding for general #2

Deciding majority for general #2: [true, false, false, false, false]

deciding for general #3

Deciding majority for general #3: [true, true, false, true, false]

General #4 decision after round OM(1): true

deciding for general #2

Deciding majority for general #2: [true, false, false, false, false]

deciding for general #3

Deciding majority for general #3: [true, true, false, true, false]

deciding for general #4

Deciding majority for general #4: [true, true, false, true, true]

General #1 decision after round OM(2): true

total_messages: 15

successful: false

Found a case where consensus isn't achieved

Well, I guess Leslie was right after all?

Change num_of_generals to 7, and it will succeed. Pretty cool, uh?

Where to go from here

Just sign the message, for god’s sake!

The problem and solution above are really fun and all. But, come on, it’s 2022, and we have cryptography. Why are we allowing messages to be forged by the traitors?

Removing the ability to forge messages simplifies the BGP a lot. The message looks like v:j:i where v is the value (e.g., either attack or retreat, true or false), signed by j, and then signed by `i'.

The implementation of this approach is really dependent on the actual business logic of the system, so I won’t be doing that now. But from the A1-A4 assumptions on the original paper, we can simplify the phrasing that states that to implement a BGP with signed messages, one must guarantee that:

- A1: Every message that is sent is delivered correctly;

- A2: The receiver of a message knows who sent it;

- A3: The absence of a message can be detected;

- A4: A loyal general’s signature cannot be forged, and any alteration of the contents of his signed messages can be detected. Anyone can verify the authenticity of a general’s signature.

And the end result with PKI (private-key infrastructure, cryptographically secure signatures) is that, instead of $f \geq 3m + 1$, it can tolerate up to 50% (or $n/2$) of faulty nodes, hence the famous 51% attack. A much better result by modifying the algorithm slightly to include signatures. Cryptography is awesome, isn’t it?

The coding exercise above was really nice to build intuition on the problem and its first solution, but the rabbit hole is really deep. One can continue by implementing signatures on the solution described here. Then, slowly relaxing other assumptions (synchronicity, permission model), making the problem and solution harder but more powerful and practical.